[This is a re-post from my old blog, where this appeared March 8, 2014]

Several people have been reminding us that we need to perform well-powered studies. It’s true this is a problem, because low power reduces the informational value of studies (a paper Ellen Evers and I wrote about this has now appeared in Perspectives on Psychological Science, and is available here). If you happen to have a very large sample, good for you. But here I want to prevent people from drawing the incorrect reverse inference that the larger the sample size you collect, the better. Instead, I want to discuss when it’s good enough.

I believe we should not let statisticians define the word ‘better’. The larger the sample size, the more accurate your parameter estimates (such as means and effect sizes in a sample). Although accurate parameter estimates are always a goal when you perform a study, they might not always be your most important goal. I should admit, I’m a member of an almost extinct species that still dares to publicly admit that I think Null-Hypothesis Significance Tests (NHST) have their use. Another (deceased) member of this species was Cohen, but for some reason, 2682 people cite his paper where he argues against NHST, and only 20 people have ever cited his rejoinder where he admits NHST has its use.

Let’s say I want to examine whether I’m violating a cultural norm if I walk around naked when I do my grocery shopping. My null hypothesis is no one will mind. By the time I reach the fruit section, I’ve received 25 distressed, surprised, and slightly disgusted glances (and perhaps two appreciative nods, I would like to imagine). Now beyond the rather empty statement that more data is always better, I think it would be wise if I get dressed at this point. My question is answered. I don’t exactly know how strong the cultural norm is, but I know I shouldn’t walk around naked.

Even if you are not too fond of NHST, there are times when your ethical board will stop you from collecting too much data (and rightly so). We can expect our participants to volunteer (or perhaps receive a modest compensation) to participate in scientific research because they want to contribute to science, but their contribution should be worthwhile, and balanced against their suffering. Let’s say you want to know whether fear increases or decreases depending on the brightness of the room. You put people in a room with either 100 or 1000 lux, and show them 100 movie clips from the greatest horror films of all time. Your ethical board will probably tell you that the mild suffering you are inducing is worth it, in terms of statistical power, for participants 50 to 100, but not so much for participants 700 to 750, and will ask you to stop when your data is convincing enough.

Finally, imagine a taxpayer who walks up to you, hands you enough money to collect data from 1000 participants, and tells you: “give me some knowledge”. You can either spend all the money to perform one very accurate study, or 4 or 5 less accurate (but still pretty informational) studies. What should you do? I think it would be a waste of the taxpayer’s money if you spend all the money on a single experiment.
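To put rough numbers on that trade-off, here is a minimal sketch. It is not from the original post: it assumes a simple two-group design, a normal approximation with a known standard deviation of 1, and a hypothetical true effect of d = 0.5 (the `ASSUMED_D` constant), all chosen purely for illustration.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def ci_half_width(n_per_group, confidence=0.95):
    """Approximate half-width of the CI around a standardized mean difference."""
    z = Z.inv_cdf(1 - (1 - confidence) / 2)
    return z * (2 / n_per_group) ** 0.5

def power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group test for a true effect d."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    return 1 - Z.cdf(z_crit - d * (n_per_group / 2) ** 0.5)

ASSUMED_D = 0.5  # hypothetical true effect size, for illustration only

# 1000 participants: one two-group study (500 per group),
# or five two-group studies (100 per group each).
designs = [("one study, 500 per group", 500),
           ("each of five studies, 100 per group", 100)]
for label, n in designs:
    print(f"{label}: CI half-width = {ci_half_width(n):.2f}, "
          f"power = {power(ASSUMED_D, n):.2f}")
```

Under these assumptions the single large study gives a confidence interval of roughly ±0.12 around the effect size, while each smaller study gives roughly ±0.28 but still has about 94% power. Whether that loss of precision is acceptable depends on the effect size you expect and on what you want to learn, which is exactly the judgment call discussed here.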

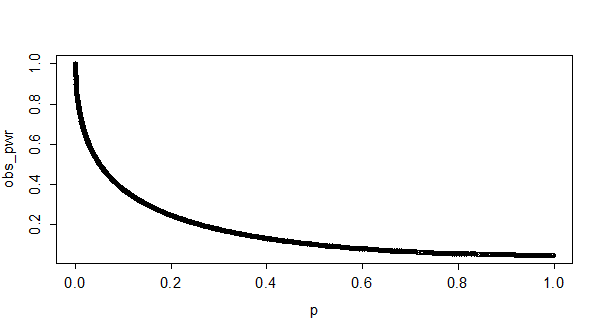

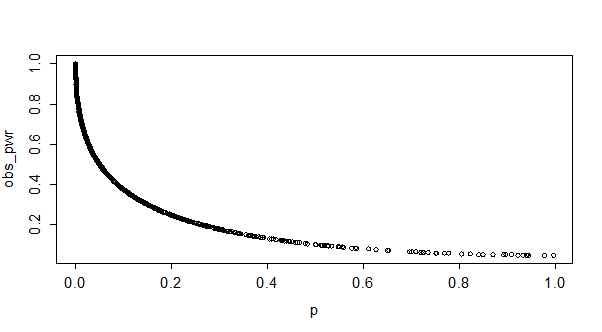

So, when are studies informational (or convincing) enough? And how do you know how many participants you need to collect, if you have almost no idea about the size of the effect you are investigating?

Here’s what you need to do. First, determine your SESOI (Smallest Effect Size Of Interest). Perhaps you know you can never (or are simply not willing to) collect data from more than 300 people in individual sessions. Perhaps your research is more applied, and allows for a cost-benefit analysis that requires the effect to be larger than some value. Perhaps you are working in a field that does not simply consist of directional predictions (X > Y) but allows for stronger predictions (e.g., your theoretical model predicts the effect size should lie between r = .6 and r = .7).
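One way to turn a maximum sample size into a SESOI is to ask which effect size that sample could detect with reasonable power. The sketch below is only an illustration of that idea: it assumes two equally sized independent groups, a two-sided alpha of .05, 80% power, and a normal approximation to the t-test. The function name `smallest_detectable_d` is made up for this example.

```python
from statistics import NormalDist

def smallest_detectable_d(n_per_group, alpha=0.05, power=0.80):
    """Smallest standardized mean difference (Cohen's d) detectable with the
    requested power in a two-sided, two-group comparison (normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = z.inv_cdf(power)          # quantile matching the desired power
    return (z_alpha + z_power) * (2 / n_per_group) ** 0.5

max_total = 300  # the most participants you are willing to collect
sesoi = smallest_detectable_d(max_total // 2)
print(f"With {max_total} participants in total, d = {sesoi:.2f} "
      f"is about the smallest effect you can reliably detect")
```

With 150 participants per condition this comes out at roughly d = 0.32. Effects smaller than that are ones you have implicitly decided you cannot afford to study with this design.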

After you have determined this value, collect data. After you have a reasonable number of observations (say 50 in each condition), analyze the data. If the effect is not significant, but the observed effect size is still above your SESOI, collect some more data. If (say after 120 participants in each condition) the effect is significant, and your question is suited for a NHST framework, stop the data collection, write up your results, and share them. Make sure that, when performing the analyses and writing up the results, you control the Type 1 error rate. That’s very easy, and is often done in other research areas such as medicine. I’ve explained how to do it, and provide step-by-step guides, here (the paper has now appeared in the European Journal of Social Psychology). If you prefer to reach a specific width of a confidence interval, or really like Bayesian statistics, determine alternative reasons to stop the data collection, and continue looking at your data until your goal is reached.
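To see why controlling the Type 1 error rate matters when you look at your data more than once, here is a small simulation sketch. It is not the procedure from the paper mentioned above: it assumes two equally spaced looks (at 60 and 120 participants per condition, a simplification of the numbers in the text), a z-test with a known standard deviation of 1, and the Pocock correction, which for two looks uses a nominal alpha of .0294 at each look.

```python
import random
from statistics import NormalDist, fmean

def two_sided_p(z):
    """Two-sided p-value for a z statistic."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

def z_stat(a, b):
    """z-test for a difference in means of two equally sized groups,
    assuming a known SD of 1 in both."""
    n = len(a)
    return (fmean(a) - fmean(b)) / (2 / n) ** 0.5

def false_positive_rate(alpha_per_look, looks=(60, 120), n_sims=4000, seed=1):
    """Share of simulated null studies that reach significance at any look."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        # Both groups come from the same population: the null is true.
        a = [rng.gauss(0, 1) for _ in range(looks[-1])]
        b = [rng.gauss(0, 1) for _ in range(looks[-1])]
        # Stop and declare an effect at the first look with p below the threshold.
        if any(two_sided_p(z_stat(a[:n], b[:n])) < alpha_per_look for n in looks):
            hits += 1
    return hits / n_sims

naive = false_positive_rate(0.05)     # test at .05 at every look
pocock = false_positive_rate(0.0294)  # Pocock-corrected threshold for two looks
print(f"uncorrected: {naive:.3f}, Pocock-corrected: {pocock:.3f}")
```

Without a correction, the false positive rate across both looks should come out clearly above 5%, while the corrected threshold should keep it close to 5%. With unequally spaced looks or more than two looks, the threshold changes, so treat the .0294 value as specific to this two-look setup.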

The recent surge of interest in things like effect sizes, confidence intervals, and power is great. But we need to be aware, especially when communicating this to researchers who’ve spent less time reading up on statistics, that we are telling them to change the way they work without telling them exactly how to change it. Saying more data is always better might be a little demotivating for people to hear, because it means it is never good enough. Instead, we need to make it as easy as possible for people to improve the way they work, by giving advice that is as concrete as possible.